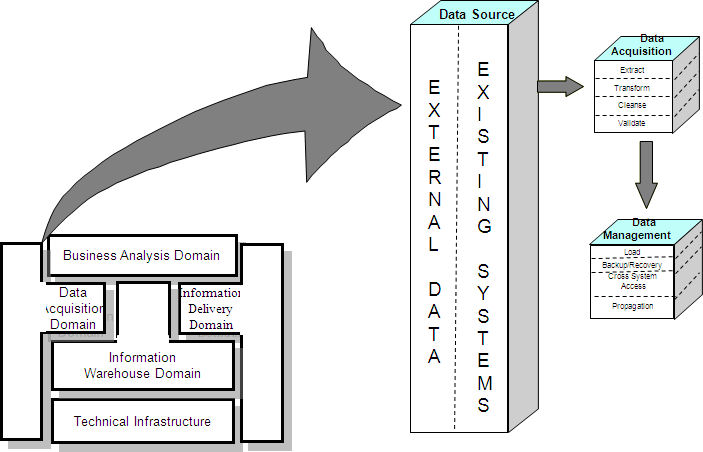

The Data Acquisition Domain includes Enterprise Source Data and the processes necessary to periodically move data from the external data sources into the Information Warehouse.

The components of the Data Acquisition Domain are:

- Data Sources

- Data Acquisition

3.1. Data Sources

Data Sources are the operational files that are used to perform the business of the enterprise. These include:

- Application master files

- Application control files

- Historical data files

- Transaction and event files

They may also include:

- Departmental data files

- Individual data files

In all cases, metadata describing the location, meaning, and content of the information to be used from these sources must be captured.
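As an illustration of what "location, meaning and content" metadata might look like in practice, the sketch below records it as plain data classes. The class names, field names, and the sample file path are hypothetical; a real Metadata Repository would define its own schema.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FieldMetadata:
    """Meaning and content of one field taken from a source (hypothetical structure)."""
    name: str
    data_type: str
    description: str


@dataclass(frozen=True)
class SourceMetadata:
    """Location, meaning and content of one data source (hypothetical structure)."""
    source_name: str
    location: str      # where the data lives, e.g. a file path or a DBMS table name
    source_kind: str   # e.g. "application master", "transaction and event"
    description: str   # business meaning of the source as a whole
    fields: Tuple[FieldMetadata, ...] = ()

    def field_names(self) -> List[str]:
        return [f.name for f in self.fields]


# Hypothetical entry for an application master file.
customer_master = SourceMetadata(
    source_name="customer_master",
    location="/data/ops/customer_master.dat",
    source_kind="application master",
    description="One record per customer of the enterprise",
    fields=(
        FieldMetadata("cust_id", "CHAR(10)", "Customer identifier"),
        FieldMetadata("birth_date", "DATE", "Customer date of birth"),
    ),
)
```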

3.2. Data Acquisition

Data Acquisition employs the business rules metadata captured in the Business Analysis Domain to extract the required data, prepare it and integrate it into the Information Warehouse.

The components of Data Acquisition are:

- Data extract

- Data transform

- Data validate

- Data cleanse

- Data load and update

- Data propagate

3.2.1. Extract

The Data Extraction function selects data from multiple systems of record, which can exist in the Operational Environment, the External Environment, or both.

Data Extract features include:

- Selectively extracting records from a file and/or fields from a record

- Accessing variable/fixed length records/fields

- Processing data extracted from source systems as well as directly reading source data structures

- Reading and processing external data

- Selecting changes by time or event

- Reprocessing error records

- Logging data anomalies and continuing processing

- Maintaining data and referential integrity

- Satisfying quality control and security requirements.
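A few of these features (selecting fields from a record, selecting changes by time, and logging anomalies while continuing to process) can be sketched together. This is a minimal example assuming each source record carries an ISO-8601 `last_modified` timestamp; that field name and the function name are hypothetical.

```python
import logging
from datetime import datetime

log = logging.getLogger("extract")


def extract_changes(records, since, wanted_fields):
    """Select records changed after `since`, keeping only `wanted_fields`.

    Records whose change timestamp is missing or malformed are logged as
    anomalies and returned separately for reprocessing; the run continues.
    """
    extracted, errors = [], []
    for rec in records:
        try:
            changed_at = datetime.fromisoformat(rec["last_modified"])
        except (KeyError, TypeError, ValueError) as exc:
            log.warning("anomaly in record %r: %s", rec, exc)
            errors.append(rec)
            continue
        if changed_at > since:
            # Field-level selection: keep only the requested fields.
            extracted.append({name: rec.get(name) for name in wanted_fields})
    return extracted, errors


source = [
    {"cust_id": "C1", "name": "Ann", "last_modified": "2024-01-05T10:00:00"},
    {"cust_id": "C2", "name": "Bob", "last_modified": "2023-12-31T23:00:00"},
    {"cust_id": "C3", "name": "Cy", "last_modified": "not a date"},
]
rows, rejects = extract_changes(source, datetime(2024, 1, 1), ["cust_id"])
```

Returning the rejected records, rather than discarding them, is what makes the "reprocessing error records" feature possible.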

Additionally, Data Acquisition Extract supports:

- Source Database Management System (DBMS)/platform and file structures

- Metadata functionality (e.g., importing source and target definitions from the Metadata Repository for program implementation)

- Business rule definition, Boolean operations, and programmable exits

- Audit trails and control counts, as well as error and exception handling

- Checkpoint and restart processing

- Data compression and encryption

- Graphical User Interface (GUI) front ends.
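Checkpoint and restart processing, combined with a control count, can be sketched as follows. This assumes records arrive in a stable order so that a position can serve as the checkpoint; the JSON checkpoint file and the function names are hypothetical. Records handled after the last checkpoint but before a failure are handled again on restart, so the handler should tolerate being applied twice.

```python
import json
import os


def _write_checkpoint(path, done):
    # Write to a temporary file, then atomically replace the old checkpoint.
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"done": done}, f)
    os.replace(tmp, path)


def process_with_checkpoint(records, handle, checkpoint_path, every=100):
    """Process records, checkpointing the number completed every `every` records.

    On restart, records already covered by the checkpoint are skipped.
    Returns the control count of records processed in this run.
    """
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = json.load(f)["done"]
    processed = 0
    for i, rec in enumerate(records):
        if i < start:
            continue  # already done in a previous run
        handle(rec)
        processed += 1
        if (i + 1) % every == 0:
            _write_checkpoint(checkpoint_path, i + 1)
    _write_checkpoint(checkpoint_path, start + processed)
    return processed
```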

3.2.2. Transform

Data Transformation applies business rules and technical requirements to extracted data to create standardized, consolidated information.

Data Transformation features include:

- Accessing variable/fixed length records/fields

- Correcting data by applying business rules

- Checking content of data and correcting errors

- Defaulting field values when no source value exists

- Performing translation and substitution of values

- Deriving fields from other fields

- Performing domain and referential integrity processing

- Reprocessing error records

- Satisfying quality control and security requirements

- Creating and logging control information to support data quality control

- Summarizing, aggregating, and deriving data.
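Several of these transformation features (defaulting, value translation, field derivation, and summarization) are illustrated in the sketch below. The rule tables are hard-coded here only for brevity; in the architecture described above they would come from business rules metadata. All table, field, and function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical rule tables standing in for business rules metadata.
DEFAULTS = {"region": "UNKNOWN"}
CODE_TRANSLATION = {"status": {"A": "ACTIVE", "C": "CLOSED"}}


def transform(record):
    out = dict(record)
    # Default field values when no source value exists.
    for name, value in DEFAULTS.items():
        if out.get(name) in (None, ""):
            out[name] = value
    # Translate/substitute coded values; unknown codes pass through unchanged.
    for name, mapping in CODE_TRANSLATION.items():
        if name in out:
            out[name] = mapping.get(out[name], out[name])
    # Derive a field from other fields.
    out["balance"] = out["credits"] - out["debits"]
    return out


def summarize(records, key):
    """Aggregate derived balances by the value of `key`."""
    totals = defaultdict(float)
    for rec in records:
        totals[rec[key]] += rec["balance"]
    return dict(totals)


rows = [
    transform({"cust_id": "C1", "status": "A", "region": None, "credits": 100.0, "debits": 40.0}),
    transform({"cust_id": "C2", "status": "C", "region": "EAST", "credits": 10.0, "debits": 10.0}),
]
```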

Additionally, Data Transformation supports:

- Reformatting of data and conversion of data types, date fields, time fields, code fields, and data formats

- Null transforms

- Merging, sorting, and splitting of data from multiple sources

- Audit trails and control counts, as well as error and exception handling

- Checkpoint and restart processing

- Automated links to the Metadata Repository

- Data Transport methods, Data Load/Update, and the target DBMS.
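Merging, sorting, and splitting data from multiple sources can be sketched with the standard library. This assumes each source stream is already sorted on the merge key (unsorted sources would be sorted first); the function names are hypothetical.

```python
import heapq


def merge_sorted_sources(*sources, key):
    """Merge already-sorted record streams into one stream sorted on `key`."""
    return heapq.merge(*sources, key=key)


def split(records, predicate):
    """Split records into those matching `predicate` and the rest."""
    matched, rest = [], []
    for rec in records:
        (matched if predicate(rec) else rest).append(rec)
    return matched, rest


source_a = [{"id": 1}, {"id": 4}]
source_b = [{"id": 3}, {"id": 2}]
merged = list(merge_sorted_sources(source_a, sorted(source_b, key=lambda r: r["id"]),
                                   key=lambda r: r["id"]))
evens, odds = split(merged, lambda r: r["id"] % 2 == 0)
```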

3.2.3. Validate

Data Validation addresses the monitoring and verification of data throughout the acquisition process.

Data Validation features include:

- a logging mechanism with statistical controls

- a flexible exception reporting facility

- balance controls to the system of record

- schedule and time verification of business data within the Information Warehouse

- action/response triggers
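One common way to implement balance controls to the system of record is to compare control totals (a row count and a sum over a monetary field) computed on both sides. The sketch below assumes an `amount` field and uses `Decimal` to avoid floating-point rounding in the comparison; the names are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class ControlTotals:
    row_count: int
    amount_total: Decimal


def control_totals(rows, amount_field="amount"):
    total = sum((Decimal(str(r[amount_field])) for r in rows), Decimal("0"))
    return ControlTotals(len(rows), total)


def balance_check(source, warehouse):
    """Return discrepancy messages; an empty list means the load balances."""
    issues = []
    if source.row_count != warehouse.row_count:
        issues.append(f"row count mismatch: source={source.row_count} "
                      f"warehouse={warehouse.row_count}")
    if source.amount_total != warehouse.amount_total:
        issues.append(f"amount mismatch: source={source.amount_total} "
                      f"warehouse={warehouse.amount_total}")
    return issues


src = control_totals([{"amount": "10.50"}, {"amount": "4.25"}])
wh = control_totals([{"amount": "10.50"}])
```

A non-empty result from `balance_check` is the kind of condition that could feed the exception reporting facility or an action/response trigger.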

In addition, Data Validation can perform the following checks:

- dependency

- edit

- authentication

- referential integrity and domain

- redundancy

- completeness and reasonableness (e.g., flagging an account opened before the customer's date of birth).
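The sketch below applies completeness, domain, referential integrity, and reasonableness checks to a single account record, including the account-opened-before-birth example. The required fields, the status domain, and the customer lookup structure are all hypothetical assumptions.

```python
from datetime import date

REQUIRED = ("acct_id", "cust_id", "opened", "status")  # hypothetical required fields
VALID_STATUS = {"ACTIVE", "CLOSED"}                     # hypothetical status domain


def validate_account(acct, known_customers):
    """Return a list of (check, message) failures for one account record."""
    failures = []
    missing = [f for f in REQUIRED if acct.get(f) in (None, "")]
    if missing:
        failures.append(("completeness", f"missing fields {missing}"))
    if acct.get("status") not in VALID_STATUS:
        failures.append(("domain", f"invalid status {acct.get('status')!r}"))
    cust = known_customers.get(acct.get("cust_id"))
    if cust is None:
        failures.append(("referential integrity",
                         f"unknown customer {acct.get('cust_id')!r}"))
    elif acct.get("opened") is not None and acct["opened"] < cust["birth_date"]:
        failures.append(("reasonableness", "account opened before customer was born"))
    return failures


customers = {"C1": {"birth_date": date(1990, 5, 1)}}
ok = {"acct_id": "A1", "cust_id": "C1", "opened": date(2010, 1, 1), "status": "ACTIVE"}
bad = {"acct_id": "A2", "cust_id": "C1", "opened": date(1985, 1, 1), "status": "X"}
orphan = {"acct_id": "A3", "cust_id": "C9", "opened": date(2010, 1, 1), "status": "ACTIVE"}
```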

3.2.4. Cleanse

Data Cleansing addresses the correction of data exceptions prior to the final acquisition process. Data Cleansing usually involves human intervention to determine whether an exception is due to a shortcoming in the corporate data policy, an error in the system of record, or an error in the transformation and/or validation processes.

Data cleansing features include:

- Evaluation of exceptions against corporate data policies

- Modification of the data policy to recognize the exception as valid

- Notification of a need for correction in the system of record

- Modification of the transformation and/or validation rules

3.2.5. Load and Update

Data Load and Update processes the data prepared by Data Transformation and populates the Information Warehouse.

Data Acquisition Load features include:

- Loading empty tables, appending data to loaded tables, and applying changes to existing rows

- Applying changes by time or event

- Time stamping of rows

- Loading a large number of rows efficiently

- Invoking the creation of indexes during a load process or deferring index creation

- Invoking purging/archiving/back-up of selected data

- Turning logging on and off

- Logging data anomalies and continuing processing

- Capturing and reprocessing error records.
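Loading new rows, applying changes to existing rows, time stamping, and capturing error records can be combined in one upsert loop. This sketch uses Python's built-in `sqlite3` module as a stand-in for the warehouse DBMS; the `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later. The table and function names are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone

DDL = """
CREATE TABLE IF NOT EXISTS customer (
    cust_id    TEXT PRIMARY KEY,
    name       TEXT NOT NULL,
    loaded_at  TEXT NOT NULL
)"""

UPSERT = (
    "INSERT INTO customer (cust_id, name, loaded_at) VALUES (?, ?, ?) "
    "ON CONFLICT(cust_id) DO UPDATE SET name = excluded.name, loaded_at = excluded.loaded_at"
)


def load_customers(conn, rows):
    """Insert new rows, update existing ones, timestamp each row, and return rejects."""
    now = datetime.now(timezone.utc).isoformat()
    rejects = []
    with conn:  # commits on success, rolls back if an uncaught exception escapes
        for row in rows:
            try:
                conn.execute(UPSERT, (row["cust_id"], row["name"], now))
            except (KeyError, sqlite3.IntegrityError) as exc:
                # Capture the failing record for later reprocessing and continue.
                rejects.append((row, str(exc)))
    return rejects


conn = sqlite3.connect(":memory:")
conn.execute(DDL)
first = load_customers(conn, [{"cust_id": "C1", "name": "Ann"},
                              {"cust_id": "C2", "name": None},   # violates NOT NULL
                              {"name": "No id"}])                 # missing key
second = load_customers(conn, [{"cust_id": "C1", "name": "Ann Smith"}])
```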

Additionally, Data Acquisition Load supports:

- Parallel execution of loads and associated functions

- Audit trails and control counts, as well as error and exception handling

- Checkpoint and restart processing

- The DBMS of the Information Warehouse and Data Acquisition Transform.

3.2.6. Propagate

Data Propagation creates or stages data in the Information Staging component of the Information Delivery Domain. Propagation takes two forms: push and pull.

Push propagation includes the following types:

- Scheduled

- Event triggered

- Update triggered

Pull propagation is triggered by a business user action.
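The difference between push and pull propagation is mainly who initiates the copy into Information Staging. The sketch below models that with a hypothetical `Propagator` class and a caller-supplied `warehouse_query` function; it is an illustration of the two trigger styles, not a prescribed interface.

```python
class Propagator:
    """Stages warehouse data for Information Delivery (hypothetical model)."""

    PUSH_TRIGGERS = ("scheduled", "event", "update")

    def __init__(self, warehouse_query):
        self._query = warehouse_query  # hypothetical: subject -> rows from the warehouse
        self.staging = {}              # subject -> staged rows
        self.log = []                  # (subject, trigger) audit trail

    def _propagate(self, subject, trigger):
        self.staging[subject] = list(self._query(subject))
        self.log.append((subject, trigger))
        return self.staging[subject]

    def push(self, subject, trigger):
        """Push: initiated by a schedule, an event, or a warehouse update."""
        if trigger not in self.PUSH_TRIGGERS:
            raise ValueError(f"unknown push trigger {trigger!r}")
        self._propagate(subject, trigger)

    def pull(self, subject, user):
        """Pull: initiated by a business user's request."""
        return self._propagate(subject, f"user:{user}")


warehouse = {"sales": [{"amt": 1}]}
p = Propagator(lambda subject: warehouse.get(subject, []))
p.push("sales", "scheduled")
```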